What is Regression? And Linear Regression

A statistical measure that attempts to determine the strength of the

relationship between one dependent variable (usually denoted by Y) and a

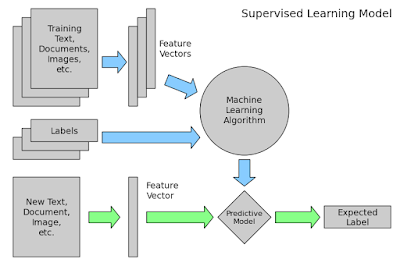

series of other changing variables (known as independent variables). Regression is very powerful technique for prediction in Machine Learning. Regression is Supervised Learning type algorithm.

Different types of Regression:

- Linear Regression

- Logistic Regression

Linear Regression:

In simple linear regression, we predict scores on one variable from the scores on a second variable. The variable we are predicting is called the criterion variable and is referred to as Y. The variable we are basing our predictions on is called the predictor variable and is referred to as X. When there is only one predictor variable, the prediction method is called simple regression. In simple linear regression, the topic of this section, the predictions of Y when plotted as a function of X form a straight line.

A linear regression line has an equation of the form Y = a + bX, where X is the explanatory variable and Y is the dependent variable. The slope of the line is b, and a is the intercept (the value of Y when X = 0).
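To make the equation Y = a + bX concrete, here is a minimal Python sketch that evaluates it. The function name `predict_line` and the values of `a` and `b` are made up for illustration; they do not come from any data in this post.

```python
def predict_line(x, a, b):
    """Return Y = a + b*X for intercept a and slope b."""
    return a + b * x

# Hypothetical coefficients, chosen only for illustration.
a, b = 2.0, 0.5

print(predict_line(0, a, b))   # the intercept: value of Y when X = 0
print(predict_line(10, a, b))  # the intercept plus 10 times the slope
```

Increasing X by one unit always changes the result by exactly b, which is what "slope" means here.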

Suppose data on temperature (x) and the yield of a chemical process (Y) are given in Table - 1 below.

Table - 1

We can draw a scatter plot of the data in Table - 1 in the Cartesian coordinate system, as shown in Plot - 1.

Plot - 1

It is clear that no line can be found to pass through all points of the plot. Thus no functional relation exists between the two variables x and Y. However, the scatter plot does give an indication that a straight line may exist such that all the points on the plot are scattered randomly around this line. A statistical relation is said to exist in this case.

The statistical relation between x and Y may be expressed as follows:

Y = B0 + B1*x + e

The above equation is the linear regression model that can be used to explain the relation between x and Y that is seen on the scatter plot above. In this model, the mean value of Y (abbreviated as E(Y)) is assumed to follow the linear relation:

E(Y) = B0 + B1*x

The actual values of Y (which are observed as yield from the chemical process from time to time and are random in nature) are assumed to be the sum of the mean value, E(Y), and a random error term, e:

Y = E(Y) + e

= B0 + B1*x + e


The regression model here is called a simple linear regression model because there is just one independent variable, x, in the model. In regression models, the independent variables are also referred to as regressors or predictor variables. The dependent variable, Y, is also referred to as the response. The slope, B1, and the intercept, B0, of the line E(Y) = B0 + B1*x are called regression coefficients. The slope, B1, can be interpreted as the change in the mean value of Y for a unit change in x.
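The model Y = B0 + B1*x + e can be illustrated with a small simulation. The sketch below assumes hypothetical true coefficients and a normally distributed error term; neither assumption comes from the data in this post. Averaging many simulated observations at a fixed x approximates E(Y), and moving x by one unit shifts that average by roughly B1.

```python
import random

def simulate_y(x, b0, b1, sigma, rng):
    """Draw one observation Y = B0 + B1*x + e, with e ~ Normal(0, sigma)."""
    return b0 + b1 * x + rng.gauss(0.0, sigma)

# Hypothetical true coefficients and noise level (assumptions for illustration).
B0, B1, SIGMA = 15.0, 2.0, 3.0
rng = random.Random(0)  # fixed seed so the run is repeatable

def mean_y_at(x, n=20000):
    """Average many simulated observations at a fixed x to approximate E(Y)."""
    return sum(simulate_y(x, B0, B1, SIGMA, rng) for _ in range(n)) / n

m50 = mean_y_at(50)
m51 = mean_y_at(51)
print(m50)        # close to B0 + B1*50
print(m51 - m50)  # close to B1, the change in mean Y per unit change in x
```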

Plot - 2

Fitted Regression Line

The true regression line is usually not known. However, the regression line can be estimated by estimating the coefficients $B_1$ and $B_0$ for an observed data set. The estimates, $\hat{B}_1$ and $\hat{B}_0$, are calculated using least squares. The estimated regression line, obtained using the values of $\hat{B}_1$ and $\hat{B}_0$, is called the fitted line. The least squares estimates, $\hat{B}_1$ and $\hat{B}_0$, are obtained using the following equations:

$$\hat{B}_1 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2}$$

$$\hat{B}_0 = \bar{y} - \hat{B}_1 \bar{x}$$

where $n$ is the number of observations, $\bar{y}$ is the mean of all the observed values and $\bar{x}$ is the mean of all values of the predictor variable at which the observations were taken. $\bar{y}$ is calculated using $\bar{y} = \frac{1}{n}\sum_{i=1}^{n} y_i$ and $\bar{x}$ is calculated using $\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i$.

Once $\hat{B}_1$ and $\hat{B}_0$ are known, the fitted regression line can be written as:

$$\hat{y} = \hat{B}_0 + \hat{B}_1 x$$

where $\hat{y}$ is the fitted or estimated value based on the fitted regression model. It is an estimate of the mean value, $E(Y)$. The fitted value, $\hat{y}_i$, for a given value of the predictor variable, $x_i$, may be different from the corresponding observed value, $y_i$. The difference between the two values is called the residual, $e_i$:

$$e_i = y_i - \hat{y}_i$$
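The least squares calculation can be sketched in a few lines of Python. In this sketch the slope is the sum of products of the x and y deviations from their means, divided by the sum of squared x deviations, and the intercept is the mean of y minus the slope times the mean of x. The function name `fit_simple_linear_regression` and the five data points are made up for illustration; they are not the values from Table - 1.

```python
def fit_simple_linear_regression(xs, ys):
    """Return (b0_hat, b1_hat), the least squares intercept and slope."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two or more paired observations")
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Sum of products of deviations, and sum of squared x deviations.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    b1_hat = sxy / sxx
    b0_hat = y_bar - b1_hat * x_bar
    return b0_hat, b1_hat

# Made-up data for illustration only (not the values from Table - 1).
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 4.0, 5.0, 4.0, 5.0]

b0_hat, b1_hat = fit_simple_linear_regression(xs, ys)
fitted = [b0_hat + b1_hat * x for x in xs]
residuals = [y - y_hat for y, y_hat in zip(ys, fitted)]

print(b0_hat, b1_hat)  # about 2.2 and 0.6, up to floating-point rounding
print(residuals)       # observed minus fitted value for each point
```

When the model includes an intercept, the residuals from a least squares fit sum to zero (up to rounding), which is a quick sanity check on the arithmetic.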

Calculation of the Fitted Line Using Least Squares Estimates

The least squares estimates of the regression coefficients can be obtained for the data in the preceding Table - 1 as follows:

Knowing both coefficients, the fitted regression line is:

This line is shown in the Plot - 3 below.

Plot - 3

Now we can predict the value of Y for any given value of x by substituting that value into the fitted regression line.